Artificial Intelligence (AI) is being rapidly integrated into many sectors, including education. Proponents argue that AI can support learners, automate administrative tasks, and even assist in content delivery and assessment. However, in vocational education and training (VET)—where accuracy, practical skill development, and contextual understanding are paramount—relying on AI is fraught with problems. As various studies and real-world experiences show, the current capabilities of AI can make it unsuitable, unreliable, and even unethical for use in this educational field.

Before you call me a Luddite, let me just say: I love using new technology, and I have been pushing for the adoption of new technology in VET for over 10 years. I was one of the first trainers in the world to introduce e-learning to the building industry, and I am fluent in many learning management systems and instructional design software packages. I use AI in my work almost every day. Technology has been revolutionising education, in most cases for the better. However, like most technologies, AI has been overhyped, and it’s time to call out how it is being misused.

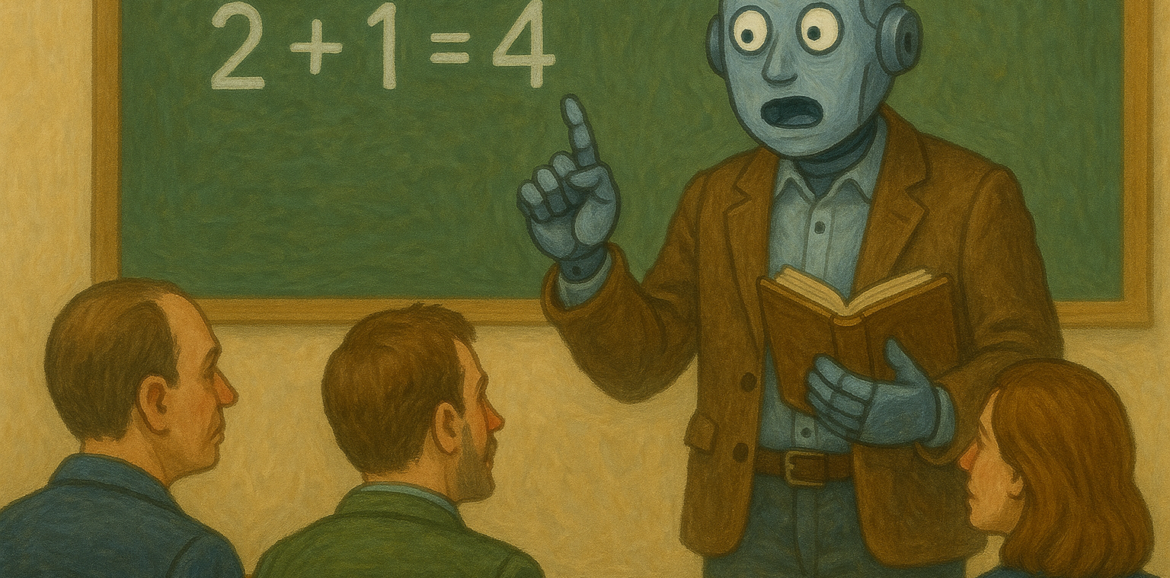

The AI Emperor Has No Clothes!

1. AI Hallucinates—And Can’t Be Trusted with Facts

One of the most significant concerns is that AI tools like ChatGPT and similar models often generate “hallucinations”: plausible but false information. We tradies like to call a spade a spade, so let’s get real: AI is a fully automated bullshit generator. According to recent reporting by TechRadar, even as AI models become more advanced, their tendency to hallucinate is getting worse. For example, GPT-4.5 reportedly hallucinates in over 37% of answers on one benchmark, while some smaller models reach rates of up to 79% on certain tasks.

Educators at the University of Washington are so concerned about the amount of bullshit being generated that they even created an online course about it, entitled “Modern-Day Oracles or Bullshit Machines?”

This is because of how large language models (LLMs) work: they are basically just a fancy predictive-text app. They are fed huge amounts of data and trained to predict the most likely answer to any question you give them. In most cases they are really, really good at doing this. But when they don’t know the answer, they usually won’t tell you. They are built to give an answer, even if it’s wrong.
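To make the “predictive text” idea concrete, here is a deliberately tiny toy in Python. It is an illustrative sketch only: the function names (`train`, `predict_next`) and the training sentence are made up for this example, and real LLMs use neural networks trained on billions of words rather than simple word counts. What the toy shares with them is the behaviour described above: it always returns the most likely-looking next word, even for a word it has never seen.

```python
from collections import Counter, defaultdict


def train(corpus: str) -> dict[str, Counter]:
    """Count how often each word follows each other word (a 'bigram' model)."""
    words = corpus.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def predict_next(model: dict[str, Counter], word: str) -> str:
    """Return the most likely next word. There is no 'I don't know' branch."""
    followers = model.get(word.lower())
    if followers:
        return followers.most_common(1)[0][0]
    # Never seen this word followed by anything? Guess the most common
    # word overall instead of admitting ignorance.
    overall: Counter = Counter()
    for counts in model.values():
        overall.update(counts)
    return overall.most_common(1)[0][0]


# Hypothetical training text, purely for illustration
model = train("check the saw check the guard check the blade")
print(predict_next(model, "check"))   # a word it has seen: prints "the"
print(predict_next(model, "welder"))  # a word it has never seen: prints "the"
```

Asked about “welder”, a word that never appears in its training text, the toy still confidently answers “the” rather than admitting it has no idea.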

In 2024, Edubytes, a not-for-profit organisation based in Australia, evaluated AI-generated learning content for construction trades. Over 30% of the content was found to be factually incorrect. That’s right: registered training organisations (RTOs) using this content are basically teaching their apprentices ‘bullshit’.

This is not a harmless quirk. In VET, which includes practical trades like carpentry, welding, or healthcare support, misinformation can have real-world consequences. Teaching a student the wrong safety procedure or providing an incorrect tool specification could result in injury or failure on the job.

In contrast, human educators are accountable for their sources and are typically required to use curriculum-approved references. Errors can still occur, but they are more likely to be identified and corrected through peer review, regulation, and professional experience.

2. AI Undermines Critical Thinking and Research Skills

VET is not just about passing tests; it is about learning how to solve problems, use tools, make judgements, and adapt to changing circumstances. Teachers are increasingly reporting that students are bypassing these learning opportunities by relying on AI-generated content. According to one educator cited in Futurism, students no longer read, analyse, or think critically. Instead, they copy AI-generated essays or answers, often without understanding the content.

This dependency undermines the development of core competencies that vocational fields require. For instance, a plumbing student who doesn’t learn to read schematics properly or critically evaluate a fault in the system cannot be expected to perform competently on the job. The same applies to aged care, automotive repair, and countless other fields where decision-making and adaptability are essential.

3. AI-Generated Content vs. Human-Researched Content

Human-created educational content typically follows rigorous development processes. Qualified trainers refer to accredited training packages, workplace procedures, regulatory guidelines, and industry standards when creating learning material. This ensures that what is taught aligns with legislative requirements, workplace safety standards, and the requirements of the training package.

AI-generated content lacks this structured approach. It is produced by probabilistic word prediction rather than factual recall. While AI can produce content that sounds sophisticated, it is not necessarily accurate or verifiable. Furthermore, AI does not cite sources unless instructed to do so, and even then it can fabricate citations, which would be a serious ethical breach in any academic or professional setting.

For comparison, if a human trainer repeatedly delivered lessons that were 37% wrong and included made-up references, they would quickly lose their job and credibility. This leads us to a sobering thought experiment:

If AI Were a Human, Would It Be Employable?

Imagine AI as a job applicant in a Registered Training Organisation. It submits a resume with falsified references, gives answers that sound right but are frequently wrong, refuses to acknowledge its mistakes unless challenged, and occasionally discloses private student data without permission. Would you hire this person?

The answer is clearly “no.” Yet, this is the performance standard we are accepting when we integrate AI into VET delivery and assessment.

4. Grading by AI Is Unreliable and Unfair

Another significant concern is the use of AI in grading assessments. The University of Georgia tested a model called Mixtral, which was asked to mark student work. Without human-created marking rubrics, the AI’s marks matched those of human markers only 33.5% of the time. Even with a rubric, it agreed with human markers barely more than 50% of the time.

Earlier this year, Edubytes tested the AI models built into VET software that RTOs in Australia rely on, and found that the AI almost never marked students’ work correctly and was oblivious to nuances in responses drawn from students’ personal experiences.

This inconsistency is unacceptable in formal education. Student results must be fair, consistent, and based on clear criteria. In VET, where competencies often form the basis for workplace licensing, this is even more critical. An incorrectly failed student may be prevented from working. Conversely, a student who is wrongly passed may enter the workplace unprepared and unsafe.

5. Ethical and Privacy Risks in AI Integration

The ethical concerns surrounding AI in education go beyond just grading. There are significant issues related to privacy and data collection. For example, in 2025, the New South Wales Department of Education was caught off guard when Microsoft Teams began collecting biometric data (like facial and voice data) from students without proper notice or consent.

This kind of data harvesting, particularly involving minors and vulnerable learners, raises major red flags. Human educators operate under strict codes of conduct and legal obligations regarding privacy and data protection. AI systems, governed by vague terms of service and corporate interests, do not offer the same level of trust or accountability.

6. AI’s Lack of Human Context and Practical Experience

Vocational education is about more than transferring knowledge; it involves coaching, mentorship, and the transfer of tacit knowledge that can only come from hands-on experience. AI has never set up a spray gun, welded a beam, or provided care to an elderly person. It cannot replicate the practical, nuanced insight that a human trainer offers.

Moreover, human trainers can adjust content based on the learner’s needs, observe signs of confusion, provide encouragement, and adapt strategies to ensure comprehension. AI cannot interpret non-verbal cues, understand emotional context, or respond empathetically to a struggling student.

7. Bias and Misinformation

AI systems reflect the data on which they were trained—which includes all the biases and prejudices of the internet. There have been documented cases where AI platforms have generated discriminatory or false statements, even labelling public figures incorrectly in terms of gender, sexual orientation, or personal beliefs. In vocational education, where inclusiveness, accuracy, and cultural awareness are crucial, these kinds of errors can be damaging and divisive.

8. AI Can’t Be Held Accountable

When a human trainer makes a mistake, they can be questioned, retrained, or disciplined. Their qualifications and conduct are regulated. AI, on the other hand, is a product—owned by corporations with no legal requirement to ensure educational accuracy or ethical conduct. If a student is harmed by an AI’s mistake, who is responsible? This lack of accountability is unacceptable in any learning environment, but especially so in VET, where outcomes can impact employment, safety, and personal livelihoods.

Proceed with Caution—If at All

Artificial Intelligence is not inherently evil, nor should it be banned entirely from the educational landscape. It can be a useful tool when applied carefully, under strict supervision, and for non-assessment purposes such as administrative support or RTO software functions.

However, when it comes to vocational education and training, the stakes are too high to risk the inaccuracies, biases, and ethical shortcomings of current AI systems. Until AI can match the reliability, accountability, and contextual understanding of human educators—and until there are robust systems in place for oversight, validation, and learner protection—it should not be used for training delivery, assessment, or curriculum development in VET.

We can’t blame RTOs or education managers for making poor choices. The push to use AI in VET is not coming from the education industry; it’s coming from software and technology companies keen to profit from unsuspecting RTOs. They don’t care about actual outcomes, because they aren’t responsible or accountable for whether students are actually learning anything.

To ask again: if AI were a human, would you trust it to educate your apprentices, certify your next nurse, or train your future electrician? If the answer is no, then it should not be trusted in the classroom either.

For great content crafted by industry experts, real intelligence, and qualified human educators, check out the Edubytes website.

Author: Daniel Wurm is a multi-award-winning instructional designer with 18 years’ experience in vocational education and training in the construction and manufacturing industries. He holds a Diploma in Vocational Education and Training and is Managing Director of Edubytes.